Despite the teething problems I had getting my head around RequireJS, I had another go at sorting it out this week. The motivation was Knockout Components: re-usable, asynchronously loaded web components that work with Knockout. This article details the steps required to make them work on a vanilla ASP.NET MVC website.

Components

If you’re not familiar with components, they are similar to ASP.NET Web Controls – re-usable, modular components that are loosely coupled – but all using client-side JS and HTML.

Although you can implement and use components without an asynchronous module loader, it makes much more sense to use one and to modularise your code and templates. This means the JS code and/or HTML template for a component is loaded only at runtime, asynchronously and on demand.

Demo Project

To show how to apply this to a project, I’ve created a GitHub repository with a web app. Step one was to create the ASP.NET MVC application. I used .NET 4.5 and MVC version 5 for this but older versions would work just as well.

Next I upgraded all the default NuGet packages, including jQuery, so that we have the latest code, and then stripped the standard ASP.NET project content from the home page. So far, nothing new.

RequireJS

Next step is to add RequireJS support. At present our app loads the JavaScript modules synchronously from the HTML pages, using the ASP.NET bundler.

public static void RegisterBundles(BundleCollection bundles)

{

bundles.Add(new ScriptBundle("~/bundles/jquery").Include(

"~/Scripts/jquery-{version}.js"));

// ... remaining bundles omitted

}

These are inserted via the _Layout.cshtml template:

@Scripts.Render("~/bundles/jquery")

@Scripts.Render("~/bundles/bootstrap")

@RenderSection("scripts", required: false)

First we add RequireJS to the web app from NuGet, along with the Text plugin for RequireJS. The plugin allows us to load non-JavaScript text resources (e.g. HTML and CSS) through RequireJS, which we’ll need for loading HTML templates. Both packages install into the /Scripts folder.

Configuring RequireJS

Next, we create a configuration script file for RequireJS. Mine looks like this; yours may differ depending on how your scripts are structured:

require.config({

baseUrl: "/Scripts/",

paths: {

jquery: "jquery-2.1.1.min",

bootstrap: "bootstrap.min"

},

shim: {

"bootstrap": ["jquery"]

}

});

This configuration uses the default Scripts folder as the base URL, and maps the module ‘jquery’ to the version of jQuery we have updated to. We’ve also mapped ‘bootstrap’ to the minified version of the Bootstrap file, and added a shim that tells RequireJS to load jQuery first whenever Bootstrap is requested.

I created this file in /Scripts/require/config.ts using TypeScript. At this point TypeScript flags an error saying that require is not defined, so we need to add some TypeScript definition files. The best resource for these is the GitHub project DefinitelyTyped, and the definitions are all on NuGet to make it even easier. We can install them from the Package Manager console:

Install-Package jquery.TypeScript.DefinitelyTyped

Install-Package bootstrap.TypeScript.DefinitelyTyped

Install-Package requirejs.TypeScript.DefinitelyTyped

Implementing RequireJS

At this point we have added the scripts but not actually changed our application to use RequireJS. To do this, we open the _Layout.cshtml file, and change the script segment to read as follows:

<script src="~/Scripts/require.js"></script>

<script src="~/Scripts/require/config.js"></script>

@* load JQuery and Bootstrap *@

<script>

require(["jquery", "bootstrap"]);

</script>

@RenderSection("scripts", required: false)

This segment loads require.js first, then runs the config.js file which configures RequireJS.

Important: Do not use the data-main attribute to load the configuration as I originally did. RequireJS loads the data-main script asynchronously, so you cannot guarantee that RequireJS is configured before the require([…]) call runs. If the browser tries to load /Scripts/jquery.js or /Scripts/bootstrap.js (the unmapped default paths), the configuration was not applied in time.

Check the network tab in the browser developer tools for 404 errors.

Adding Knockout

We add Knockout version 3.2 from the package manager along with the TypeScript definitions as follows:

install-package knockoutjs

Install-Package knockout.TypeScript.DefinitelyTyped

You need at least version 3.2 to get the Component support.

I then modified the configuration file to add a mapping for knockout:

require.config({

baseUrl: "/Scripts/",

paths: {

jquery: "jquery-2.1.1.min",

bootstrap: "bootstrap.min",

knockout: "knockout-3.2.0"

},

shim: {

"bootstrap": ["jquery"]

}

});

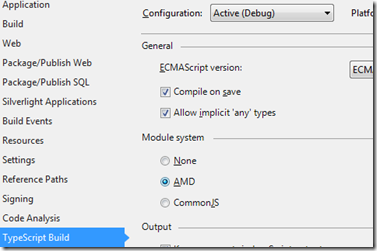

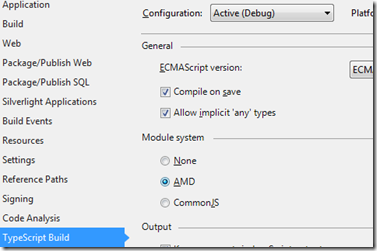

Our last change is to change the TypeScript compilation settings to create AMD output instead of the normal JavaScript they generate. We do this using the WebApp properties page:

This allows us to make us of require via the import and export keywords in our TypeScript code.

We are now ready to create some components!

Demo1 – Our First Component: ‘click-to-edit’

I am not going to explain how components work, as the Knockout website does an excellent job of this as do Steve Sanderson’s videos and Ryan Niemeyer’s blog.

Instead we will create a simple page with a view model with an editable first and last name. These are the steps we take:

- Add a new action method called Demo1 to the Home controller

- Add a view with the same name

- Create a simple HTML form, and add a script section as follows:

<p>This demonstrates a simple viewmodel bound with Knockout, and uses a Knockout component to handle editing of the values.</p>

<form role="form">

<label>First Name:</label>

<input type="text" class="form-control" placeholder="" data-bind="value: FirstName" />

@* echo the value to show databinding working *@

<p class="help-block">

Entered: {<span data-bind="text: FirstName"></span>}

</p>

<button data-bind="click: Submit">Submit</button>

</form>

@section scripts {

<script>

require(["views/Demo1"]);

</script>

}

- We then add a folder views to the Scripts folder, and create a Demo1.ts TypeScript file.

// we need knockout

import ko = require("knockout");

export class Demo1ViewModel {

FirstName = ko.observable<string>();

Submit() {

var name = this.FirstName();

if (name)

alert("Hello " + this.FirstName());

else

alert("Please provide a name");

}

}

ko.applyBindings(new Demo1ViewModel());

This is a simple viewmodel, but it demonstrates using RequireJS via an import statement. If you watch the network log in the browser developer tools, you’ll notice that the Demo1.js script is loaded asynchronously after the page has finished loading.

So far so good, but now to add a component. We’ll create a click-to-edit component where we show a text observable as a label that the user has to click to be able to edit.

Click to Edit

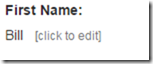

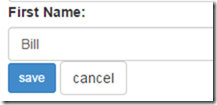

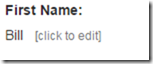

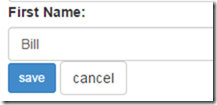

Our component will show the current value as a span control, but if the user clicks this, it changes to show an input box, with Save and Cancel buttons. If the user edits the value then clicks cancel, the changes are not saved.

The component in view mode and edit mode.

To create this we’ll add three files in a components subfolder of Scripts:

- click-to-edit.html – the template

- click-to-edit.ts – the viewmodel

- click-to-edit-register.ts – registers the component

The template and viewmodel should be simple enough if you’re familiar with Knockout: we have a viewMode observable that is true in view mode and false when editing. Next we have value, an observable string that refers to the observable passed in by the page. We’ll also have a newValue observable that binds to the input box, so we only change value when the user clicks Save.
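As a rough illustration of that state handling, here is a minimal sketch of the viewmodel logic. It is not the component’s actual source: the method names edit, save and cancel are assumed for illustration, and the observable function is a tiny stand-in for ko.observable so that the snippet runs without Knockout loaded. In the real component you would use ko.observable instead.

```typescript
// Stand-in for ko.observable so the sketch runs without Knockout:
// call with no argument to read, with one argument to write.
interface Observable<T> {
    (): T;
    (value: T): void;
}

function observable<T>(initial: T): Observable<T> {
    let current = initial;
    const accessor: any = function (...args: T[]) {
        if (args.length === 0) {
            return current;
        }
        current = args[0];
        return undefined;
    };
    return accessor;
}

// Hypothetical shape of the click-to-edit viewmodel (method names assumed).
class ClickToEditViewModel {
    viewMode = observable(true);   // true = label shown, false = editing
    newValue = observable("");     // bound to the input box while editing
    value: Observable<string>;     // the page's observable, passed in via params

    constructor(params: { value: Observable<string> }) {
        this.value = params.value;
    }

    // Switch to edit mode, starting from the current value.
    edit(): void {
        this.newValue(this.value());
        this.viewMode(false);
    }

    // Copy the edited text back to the page's observable.
    save(): void {
        this.value(this.newValue());
        this.viewMode(true);
    }

    // Leave edit mode without touching the page's observable.
    cancel(): void {
        this.viewMode(true);
    }
}

const firstName = observable("Ada");
const editor = new ClickToEditViewModel({ value: firstName });

editor.edit();
editor.newValue("Grace");
editor.cancel();
console.log(firstName()); // still "Ada"

editor.edit();
editor.newValue("Grace");
editor.save();
console.log(firstName()); // now "Grace"
```

Keeping newValue separate from value is what makes Cancel cheap: nothing has to be rolled back, because the page’s observable is only written on Save.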

TypeScript note: I found that TypeScript helpfully removes ‘unused’ imports from the compiled code, so if you don’t use the resulting clickToEdit object in the code, it gets removed from the resulting JavaScript output. I added the line var tmp = clickToEdit; to force it to be included.

Registering and Using the Component

To use the component in our view, we need to register it, then we use it in the HTML.

We can register it via import clickToEdit = require("click-to-edit-register"); at the top of our Demo1 view model script. The registration script is pretty short and looks like this:

import ko = require("knockout");

// register the component

ko.components.register("click-to-edit", {

viewModel: { require: "components/click-to-edit" },

template: { require: "text!components/click-to-edit.html" }

});

The .register() method has several different ways of being used. This version is the fully-modular version where the script and HTML templates are loaded via the module loader. Note that the viewmodel script has to return a function that is called by Knockout to create the viewmodel.
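For comparison, here is a sketch of some of the other configuration shapes that, going by the Knockout documentation, register() accepts. The ComponentConfig type is a simplified local type written for this illustration, not Knockout’s own typings, and GreeterViewModel and the element id greeter-template are made up. Each object would be passed as the second argument to ko.components.register(name, config).

```typescript
// Simplified, hypothetical typing of a component config (not Knockout's real d.ts).
type ViewModelConfig =
    | (new (params: any) => object)                                      // constructor function
    | { instance: object }                                               // one shared instance
    | { createViewModel: (params: any, componentInfo: any) => object }   // factory function
    | { require: string };                                               // AMD module id

type TemplateConfig =
    | string                  // markup string
    | { element: string }     // id of an existing DOM element
    | { require: string };    // AMD module id, e.g. via the text plugin

interface ComponentConfig {
    viewModel?: ViewModelConfig;
    template: TemplateConfig;
}

// Made-up viewmodel used only to fill in the examples below.
class GreeterViewModel {
    greeting: string;
    constructor(params: { name: string }) {
        this.greeting = "Hello " + params.name;
    }
}

// Everything inline: constructor function plus a markup string.
const inlineConfig: ComponentConfig = {
    viewModel: GreeterViewModel,
    template: "<span data-bind='text: greeting'></span>"
};

// Factory function plus a template taken from an element already on the page.
const factoryConfig: ComponentConfig = {
    viewModel: { createViewModel: (params: { name: string }) => new GreeterViewModel(params) },
    template: { element: "greeter-template" }
};

// Fully modular: both parts fetched through the module loader, as in this article.
const amdConfig: ComponentConfig = {
    viewModel: { require: "components/click-to-edit" },
    template: { require: "text!components/click-to-edit.html" }
};
```

The inline forms are handy for quick experiments, but only the require form defers loading the script and template until the component is actually used.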

TypeScript note: the viewmodel code for the component defines a class, but to work with Knockout components the module has to return a function that Knockout calls to instantiate the viewmodel. To do this, I added the line return ClickToEditViewModel; at the end of the module. This returns the class, which is of course just its constructor function. (TypeScript’s export = ClickToEditViewModel; syntax should give the same result with AMD output.) The constructor takes a params parameter that should have a value property holding the observable we want to edit.

Using the component is easy: we use the name that we used when it was registered (click-to-edit) as if it were a valid HTML tag.

<label>First Name:</label>

<div class="form-group">

<click-to-edit params="value: FirstName"></click-to-edit>

</div>

We use the params attribute to pass the observable value through to the component. When the component changes the value, you will see this reflected in the page’s model.

Sequence of Events

It’s interesting to follow through what happens in sequence:

- We navigate to the web URL /Home/Demo1, which maps to the controller action Demo1

- The view is returned, which only references one script “views/Demo1” using require()

- RequireJS loads the Demo1.js script after the page has finished loading

- This script references knockout and click-to-edit-register, which are both loaded before the rest of the script executes

- The viewmodel binds using applyBindings(). Knockout looks for registered components and finds the <click-to-edit> tags in our view, so it makes requests to RequireJS to load the viewModel script and the HTML template.

- When both have been loaded, the binding is completed.
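The ordering in the steps above (dependencies first, then the module body) can be illustrated with a toy, synchronous module registry. This is not how RequireJS is implemented, since it fetches scripts asynchronously over the network; defineModule, requireModule and the log array are stand-ins written for this illustration only.

```typescript
type Factory = (...deps: unknown[]) => unknown;

const definitions = new Map<string, { deps: string[]; factory: Factory }>();
const loaded = new Map<string, unknown>();
const log: string[] = [];

// Record a module's dependencies and factory without running it.
function defineModule(id: string, deps: string[], factory: Factory): void {
    definitions.set(id, { deps, factory });
}

// Resolve dependencies depth-first, then run the factory exactly once.
function requireModule(id: string): unknown {
    if (loaded.has(id)) {
        return loaded.get(id);
    }
    const def = definitions.get(id);
    if (!def) {
        throw new Error("Module not defined: " + id);
    }
    const resolved = def.deps.map(requireModule);
    log.push("run " + id);
    const exported = def.factory(...resolved);
    loaded.set(id, exported);
    return exported;
}

// Module ids mirror the ones used in this article; the bodies are placeholders.
defineModule("knockout", [], () => ({ library: "ko" }));
defineModule("click-to-edit-register", ["knockout"], () => "registered");
defineModule("views/Demo1", ["knockout", "click-to-edit-register"], () => "bound");

requireModule("views/Demo1");
console.log(log.join(" -> "));
// run knockout -> run click-to-edit-register -> run views/Demo1
```

Note that knockout runs only once even though two modules depend on it, which is the same guarantee the real loader gives.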

It’s interesting to watch the network graph of the load:

The purple vertical line marks when the page finished loading, after about 170ms, roughly half way through the page being completed and ready. In this case I had cleared the cache so everything was loaded fresh. The initial page load was just over 33KB of data, whereas the total loaded was 306KB. This really helps make sites more responsive on a first load.

Another feature of Knockout components is that they are loaded dynamically, only when they are used. If I had a component used for items in an observable array, and the array was empty, then the component’s template and viewModel would never be requested. This is really great if you’re creating Single Page Applications.
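To make the on-demand behaviour concrete, here is a toy registry that defers a loader until a component is first requested. It only mimics the idea: Knockout’s real component loader is asynchronous and more involved, and LazyRegistry, register and get are names invented for this sketch.

```typescript
class LazyRegistry<T> {
    private loaders = new Map<string, () => T>();
    private cache = new Map<string, T>();

    // Store the loader; nothing is loaded at registration time.
    register(name: string, loader: () => T): void {
        this.loaders.set(name, loader);
    }

    // Run the loader on first use and cache the result for later calls.
    get(name: string): T {
        if (!this.cache.has(name)) {
            const loader = this.loaders.get(name);
            if (!loader) {
                throw new Error("Unknown component: " + name);
            }
            this.cache.set(name, loader());
        }
        return this.cache.get(name) as T;
    }
}

let loadCount = 0;
const registry = new LazyRegistry<string>();
registry.register("click-to-edit", () => {
    loadCount++;
    return "<span>template</span>";
});

// An empty array never renders the component, so nothing is loaded.
const items: string[] = [];
items.forEach(() => registry.get("click-to-edit"));
console.log(loadCount); // 0

// The first real use triggers the load; the second reuses the cached result.
registry.get("click-to-edit");
registry.get("click-to-edit");
console.log(loadCount); // 1
```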

Re-using the Component

One of the biggest benefits of components is reuse. We can now extend our viewModel in the page to add a LastName property, and use the click-to-edit component in the form again:

<label>First Name:</label>

<div class="form-group">

<click-to-edit params="value: FirstName"></click-to-edit>

</div>

<label>Last Name:</label>

<div class="form-group">

<click-to-edit params="value: LastName"></click-to-edit>

</div>

Now the page has two values using the same control, independently bound.
